| Table of contents |

In this example, an integral equation is to be solved by representing the discretised operator with an 𝓗-matrix, whereby the 𝓗-matrix is constructed using a matrix coefficient function for entries of the equation system. Furthermore, for the iterative solver a preconditioner is computed using 𝓗-LU factorisation.

Problem Description

Given the integral equation

![\begin{displaymath} \int_0^1 \log|x-y| \mathbf{u}(y) dy = \mathbf{f}(x), \quad x \in [0,1] \end{displaymath}](form_67.png)

with ![$\mathbf{u} : [0,1] \to \mathbf{R}$](form_68.png) being sought for a given right hand side

being sought for a given right hand side ![$\mathbf{f} : [0,1] \to \mathbf{R}$](form_69.png) , the Galerkin discretisation with constant ansatz functions

, the Galerkin discretisation with constant ansatz functions

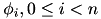

![\[ \phi_i(x) = \left\{ \begin{array}{ll} 1, & x \in \left[\frac{i}{n},\frac{i+1}{n} \right] \\ 0, & \mathrm{otherwise} \end{array} \right. \]](form_71.png)

leads to a linear equation system  where

where  contains the coefficients of the discretised

contains the coefficients of the discretised  and

and  is defined by

is defined by

The right hand side  is given by

is given by

![\[ f_i = \int_0^1 \phi_i(x) f(x) dx. \]](form_74.png)

- Remarks

- This example is identical to the introductory example in [BoerGraHac:2003b]}.

Coordinates and Cluster Trees

The code starts with the standard initialisation:

Together with the initialisation, the verbosity level of HLIBpro was increased to have additional output during execution. The corresponding function set_verbosity is available in the namespace HLIB::CFG.

For clustering the unknowns, coordinate information is to be defined. For this 1d example, the indices are placed in the middle of equally sized intervals.

Coordinates in HLIBpro are vectors of the corresponding dimension, e.g. one in for this problem. The set of all coordinates is stored in objects of type TCoordinate.

The usage of autoptr (see ???) is advised, and extensively used in HLIBpro, since it decreases the possibility of memory leaks by automatically deleting the objects upon destruction of the autopointer variable, e.g. when leaving the local block.

Having coordinates for each index, the cluster tree and block cluster tree can be constructed.

For the cluster tree, the partitioning strategy for the indices is determined automatically by TAutoBSPPartStrat. Furthermore, a  of size 20 is used during construction.

of size 20 is used during construction.

For the block cluster tree, the standard admissibility condition (see {eqn:std_adm}) implemented in TStdAdmCond is used with  .

.

Matrix Construction and Coefficient Function

Using the block cluster tree, finally the 𝓗-matrix can be constructed. For this, adaptive cross approximation (ACA, see ???) is applied to compute low rank approximations of admissibly matrix blocks. In HLIBpro, an improved version of ACA is implemented in TACAPlus.

ACA only needs access to the matrix coefficients of the dense matrix. Those are provided by TLogCoeffFn, which will be discussed below. Finally, the block wise accuracy of the 𝓗-matrix approximation has to be defined using TTruncAcc objects. In this case, an accuracy of  is used.

is used.

Matrix construction is one example of a parallelised algorithm in HLIBpro. For shared memory systems only the number of threads are needed to define the degree of parallelism. Here, the maximal number of threads available for the program is used, which is stored in mach_info.

The coefficient function used during matrix construction is implemented in class TLogCoeffFn.

It is derived from TPermCoeffFn, where the interface is defined for coefficient evaluation with permuted indices. The reordering is necessary, since due to the construction of the cluster tree the numbering of the indices in the 𝓗-matrix is usually different from the corresponding numbering in the application. The latter is referred to as the external numbering, whereas the first is called the internal numbering.

Going on with TLogCoeffFn, the step width for the grid is stored as an internal variable for kernel evaluation:

In the constructor, the permutation for mapping the internal to the external numbering are provided, both for rows and for columns, together with the step width:

The actual interface for the evaluation of the matrix coefficients is implemented by the function eval, which is defined in TPermCoeffFn and has to be overloaded:

This functions gets a set of indices for rows (rowidxs) and columns (colidxs) and a pointer to a memory block, where all the coefficients should be stored. The indices in both sets are already in the external numbering, hence the indices can directly be used for kernel evaluation. The mapping was performed by TPermCoeffFn. The memory layout of matrix is column wise, which holds for almost all data in HLIBpro. This is due to the memory layout of BLAS/LAPACK, originally implemented in Fortran, which uses column wise storage.

TPermCoeffFn is derived from TCoeffFn, which uses a slightly different evaluation interface based on index sets in the internal numbering. TPermCoeffFn overloads this method with the conversion between both numberings and implements the new eval method. To avoid compiler warnings about ``hidden functions'', the first version is brought into local scope by

By default, the format of the final matrix defined by the coefficients will be unsymmetric, e.g. lower and upper half of the matrix will be built. Please note, that this will not effect the actual algebraic matrix which may still be symmetric or hermtitian. Only the storage and subsequent algorithms are affected in terms of computational costs.

To change the matrix format, the function matrix_format has to be overloaded. In this case, as the matrix is symmetric, this looks like

Finally, TLogCoeffFn has to signal the value type, e.g. real or complex valued, to the matrix construction object with the method is_complex.

It should be noted, that for a complex coefficient function, to evaluation method is not called eval but ceval.

𝓗-LU Factorisation

Having constructed the 𝓗-matrix, the corresponding equation system shall be solved. In most cases, a standard iterative solve will not be sufficient, e.g. either the convergence rate is to bad or there is no convergence at all. Therefore, a (good) preconditioner is needed, with the inverse of  being the best possible. Since iterative schemes, only need matrix-vector multiplication, the corresponding functionality for the inverse is sufficient. This is provided by an (𝓗-) LU factorisation of

being the best possible. Since iterative schemes, only need matrix-vector multiplication, the corresponding functionality for the inverse is sufficient. This is provided by an (𝓗-) LU factorisation of  , or for the symmetric case, a LDL factorisation:

, or for the symmetric case, a LDL factorisation:

Since A is modified during the factorisation, a copy is constructed. The LDL factorisation is implemented in TLDL and makes use of multiple threads. The accuracy is chosen to be equal as during matrix construction.

B now contains the matrix data of the LDL factorisation, but computing the matrix-vector product is now no longer possible, since the special layout of the LDL data, e.g. diagonal and lower triangular part, is unknown to the matrix object itself. For this, an object of type TLDLInvMatrix has to be used, which allows the matrix-vector evaluation of the LDL factorisation stored in B. TLDLInvMatrix also has to know the format of  , e.g. symmetric or hermitian.

, e.g. symmetric or hermitian.

Iterative Solvers

Still missing is the right hand side of the equation system. In this example, it was chosen, such that  :

:

with rhs defined by

For the iterative solver TAutoSolver is used, which automatically chooses a suitable solver for the given combination of matrix and preconditioner. Furthermore, statistics about the solution process is stored in an object of type TSolver::TInfo, e.g. convergence rate, number of steps.

To check the accuracy of the computed solution, we compare it with the known exact solution:

The standard finalisation and catch block finishes the example:

1.8.1.2

1.8.1.2